Liveness Detection

Liveness detection is the process whereby facial recognition software attempts to differentiate between genuine live faces and spoofed faces (e.g. photos or recorded videos). SAFR supports four kinds of liveness detection: 3D liveness, pose liveness, RGB liveness, and smile authorization.

Each supported type of liveness detection is enabled and configured either via the relevant VIRGO feed properties or via the Recognition Preferences menus in the Desktop and/or Mobile Clients. Note that RGB liveness also requires the RGB liveness detector to be enabled and configured on the Detection Preferences menu in the Desktop and/or Mobile Clients.

RGB Liveness Detection

RGB liveness detection examines certain qualities of the facial image itself to determine liveness. It is the strongest type of liveness detection currently offered by SAFR, but it only works when subjects cooperate. This makes it an excellent choice for Secure Access scenarios, where subjects present themselves to a kiosk in order to gain entrance. Conversely, RGB liveness is a poor choice for surveillance-style camera deployments (e.g. ceiling-mounted cameras, or scenes where subjects' faces are seen at angles or are in motion).

The Secure Access with RGB Liveness operator mode has been created to support the use of RGB liveness detection.

RGB liveness detection is only available on the following platforms:

- Android devices

- Windows machines with an NVIDIA GPU

- Linux machines with an NVIDIA GPU

Note that the RGB liveness detector (located on the Detection Preferences menu in Windows and Android) needs to be enabled for RGB liveness detection to work.

RGB Liveness Usage Best Practices

RGB liveness detection works best under the following conditions:

- Subjects must look directly at the camera (i.e. their center pose quality should be very high).

- RGB liveness detection requires high-resolution images, so subjects' faces should be at least 150 pixels in size.

- Subjects' faces must remain fairly still for at least 1 second.

- Lighting must be controlled so that there is even lighting across subjects' faces.

- Ideally, there shouldn't be any backlighting.

- Subjects' faces shouldn't occupy more than 50% of the screen.

- There should be high color saturation (i.e. colors should be vibrant).

- There should be low contrast (i.e. no grain).

- The camera should be focused into the distance, with reasonable focus across the target range. As a result, the closer a subject is to the camera, the more blurred their face will appear.

- Exposure should yield a properly exposed face (i.e. there should be color depth in the face rather than a washed-out image).

- The background behind the subject should be as featureless as possible. If the background has edges and/or lines, SAFR may incorrectly interpret the background lines as edges of a tablet, thus causing an erroneous NOTLIVE_CONFIRMED result to be returned.
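Two of the guidelines above (minimum face size and maximum share of the screen) are numeric and can be checked before a kiosk is deployed. The following Python sketch is a hypothetical pre-deployment helper, not part of SAFR. It assumes that the 150-pixel minimum applies to the width of the face bounding box and that "50% of the screen" means the fraction of the frame area covered by that box.

```python
from dataclasses import dataclass
from typing import List

# Guideline values from the RGB liveness best practices.
MIN_FACE_PIXELS = 150       # assumption: measured as face bounding-box width
MAX_FRAME_FRACTION = 0.50   # assumption: measured as face area / frame area


@dataclass
class FaceBox:
    """Hypothetical face bounding box size in pixels."""
    width: int
    height: int


def framing_problems(face: FaceBox, frame_width: int, frame_height: int) -> List[str]:
    """Return framing problems for RGB liveness; an empty list means none were found."""
    problems = []
    if face.width < MIN_FACE_PIXELS:
        problems.append(
            f"face is {face.width}px wide; at least {MIN_FACE_PIXELS}px is recommended"
        )
    # Share of the frame covered by the face bounding box.
    fraction = (face.width * face.height) / (frame_width * frame_height)
    if fraction > MAX_FRAME_FRACTION:
        problems.append(f"face covers {fraction:.0%} of the frame; at most 50% is recommended")
    return problems
```

For example, a 120 x 160 pixel face in a 1920 x 1080 frame is reported as too small, while a 200 x 260 pixel face passes both checks. Lighting, focus, and background quality still need to be judged by eye.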

RGB Liveness Algorithm and Settings

RGB liveness detection uses one or two of the following models to test for liveness, depending on the Detection scheme that has been selected in the Detection Preferences menu:

- Texture Model: Examines the texture and color of the face. We recommend that you don't use the Texture model due to occasional failures to detect spoofed faces.

- Context Model: Examines the context around the face, rather than the face itself.

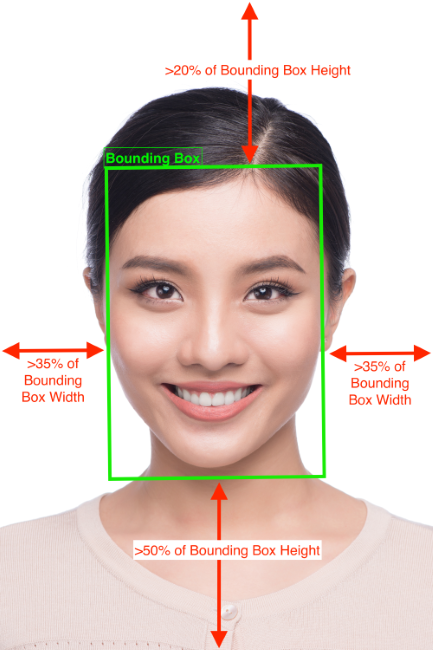

- Hybrid Model: Examines both the texture and context. To successfully use the Hybrid model, the following must be true:

  - At least 35% of the aspect-corrected width of the detected face bounding box must appear to the left and right of the detected face.

  - At least 20% of the aspect-corrected height of the detected face bounding box must appear above the detected face.

  - At least 50% of the aspect-corrected height of the detected face bounding box must appear below the detected face (i.e. there must be more context below than above the detected face).
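The Hybrid model's context requirements can be expressed as a margin check against the edges of the frame. This Python sketch uses hypothetical names (`Box`, `has_hybrid_context`). It assumes the bounding box passed in has already been aspect-corrected (the correction itself isn't described here) and that the 35% margin must be available on each side of the face.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Hypothetical face bounding box in pixels, assumed already aspect-corrected."""
    x: float  # left edge
    y: float  # top edge
    w: float  # width
    h: float  # height


def has_hybrid_context(box: Box, frame_w: float, frame_h: float) -> bool:
    """Check the Hybrid model's minimum context margins around a detected face."""
    left = box.x                          # pixels available left of the face
    right = frame_w - (box.x + box.w)     # pixels available right of the face
    above = box.y                         # pixels available above the face
    below = frame_h - (box.y + box.h)     # pixels available below the face
    return (
        left >= 0.35 * box.w       # assumption: 35% is required on each side
        and right >= 0.35 * box.w
        and above >= 0.20 * box.h
        and below >= 0.50 * box.h
    )
```

In practice this means a face near the top or bottom edge of the frame fails the check even if it is large and sharp, because the model has too little surrounding context to examine.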

There are seven Detection scheme settings available:

- Texture Unimodal: Only the Texture model will be used. Due to problems with the Texture model, we don't recommend using this setting.

- Context Unimodal: Only the Context model will be used.

- Hybrid Unimodal: Only the Hybrid model will be used. The Hybrid model evaluates both texture and context to determine liveness. This is the preferred Detection scheme for most scenarios.

- Context Multimodal: Both the Context and Hybrid models will be used. Subjects pass the RGB liveness test when the result of either model meets or exceeds the Liveness detection threshold value. This setting should be used instead of Hybrid Unimodal when false positives need to be reduced at the expense of more false negatives.

- Strict Multimodal: Both the Texture and Context models will be used. For a subject to pass the RGB liveness test, the results of both models must meet or exceed the Liveness detection threshold value. This is the default option. Due to problems with the Texture model, we don't recommend using this setting.

- Normal Multimodal: Both the Texture and Context models will be used. For a subject to pass the RGB liveness test, the average of the results of the two models must meet or exceed the Liveness detection threshold value. Due to problems with the Texture model, we don't recommend using this setting.

- Tolerant Multimodal: Both the Texture and Context models will be used. Subjects pass the RGB liveness test when the result of either model meets or exceeds the Liveness detection threshold value. Due to problems with the Texture model, we don't recommend using this setting.
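The pass rules of the seven Detection schemes can be summarized in one function. This Python sketch uses hypothetical names, takes a single liveness value per model, and deliberately ignores the preliminary Texture check and the multi-frame confirmation steps described below; it only shows how each scheme combines model results against the Liveness detection threshold.

```python
from enum import Enum
from typing import Dict


class Scheme(Enum):
    TEXTURE_UNIMODAL = "Texture Unimodal"
    CONTEXT_UNIMODAL = "Context Unimodal"
    HYBRID_UNIMODAL = "Hybrid Unimodal"
    CONTEXT_MULTIMODAL = "Context Multimodal"
    STRICT_MULTIMODAL = "Strict Multimodal"
    NORMAL_MULTIMODAL = "Normal Multimodal"
    TOLERANT_MULTIMODAL = "Tolerant Multimodal"


def passes(scheme: Scheme, scores: Dict[str, float], threshold: float) -> bool:
    """Apply a Detection scheme's pass rule.

    `scores` maps "texture", "context", or "hybrid" to that model's liveness
    value; only the models used by the scheme need to be present.
    """
    if scheme is Scheme.TEXTURE_UNIMODAL:
        return scores["texture"] >= threshold
    if scheme is Scheme.CONTEXT_UNIMODAL:
        return scores["context"] >= threshold
    if scheme is Scheme.HYBRID_UNIMODAL:
        return scores["hybrid"] >= threshold
    if scheme is Scheme.CONTEXT_MULTIMODAL:
        # Either the Context or the Hybrid model may pass the subject.
        return max(scores["context"], scores["hybrid"]) >= threshold
    if scheme is Scheme.STRICT_MULTIMODAL:
        # Both models must pass, i.e. the lower score must pass.
        return min(scores["texture"], scores["context"]) >= threshold
    if scheme is Scheme.NORMAL_MULTIMODAL:
        # The average of the two scores must pass.
        return (scores["texture"] + scores["context"]) / 2 >= threshold
    if scheme is Scheme.TOLERANT_MULTIMODAL:
        # Either model may pass the subject.
        return max(scores["texture"], scores["context"]) >= threshold
    raise ValueError(f"unknown scheme: {scheme}")
```

With a threshold of 0.7, Texture = 0.9 and Context = 0.6 fails Strict Multimodal but passes Normal Multimodal (average 0.75) and Tolerant Multimodal.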

When a model is used, the following steps are performed:

- N frames of the subject's face are evaluated for liveness, as specified by the Evaluate liveness over N frames setting.

- If the number of frames whose returned liveness value is equal to or greater than the Liveness detection threshold setting is at least (Minimum confirmations required × Evaluate liveness over N frames), then rgbLivenessState.currentState is set to LIVENESS_CONFIRMED for that subject.

- If rgbLivenessState.currentState has not been set to LIVENESS_CONFIRMED, then N frames of the subject's face are evaluated for signs of a fake, as specified by the Evaluate fake over N frames setting.

- If the number of frames whose returned liveness value is less than the Fake detection threshold setting is at least (Minimum confirmations required × Evaluate fake over N frames), then rgbLivenessState.currentState is set to NOTLIVE_CONFIRMED for that subject.

- If, after both evaluations, rgbLivenessState.currentState has been set to neither LIVENESS_CONFIRMED nor NOTLIVE_CONFIRMED, then it is set to LIVENESS_UNKNOWN. Note that rgbLivenessState.currentState is also set to LIVENESS_UNKNOWN if the quality of the subject's facial image doesn't meet the specified thresholds (i.e. the size, center pose, sharpness, and/or contrast of the subject's facial image are so poor that RGB liveness detection can't even be attempted).
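The confirmation steps above can be modeled as a small Python function. This is an illustrative sketch, not SAFR's implementation: the function and parameter names are hypothetical, and it assumes Minimum confirmations required is a fraction between 0 and 1, as implied by multiplying it by a frame count.

```python
from enum import Enum
from typing import Sequence


class LivenessState(Enum):
    LIVENESS_CONFIRMED = "LIVENESS_CONFIRMED"
    NOTLIVE_CONFIRMED = "NOTLIVE_CONFIRMED"
    LIVENESS_UNKNOWN = "LIVENESS_UNKNOWN"


def evaluate_rgb_liveness(
    live_frames: Sequence[float],  # scores from the "Evaluate liveness over N frames" window
    fake_frames: Sequence[float],  # scores from the "Evaluate fake over N frames" window
    liveness_threshold: float,     # Liveness detection threshold
    fake_threshold: float,         # Fake detection threshold
    min_confirmations: float,      # Minimum confirmations required (assumed fraction 0..1)
    quality_ok: bool = True,       # False if size/center pose/sharpness/contrast checks failed
) -> LivenessState:
    if not quality_ok:
        # Image quality too poor to attempt RGB liveness at all.
        return LivenessState.LIVENESS_UNKNOWN

    # Step 1: count frames at or above the liveness threshold.
    live_hits = sum(1 for s in live_frames if s >= liveness_threshold)
    if live_frames and live_hits >= min_confirmations * len(live_frames):
        return LivenessState.LIVENESS_CONFIRMED

    # Step 2: only reached if liveness wasn't confirmed; count frames below the fake threshold.
    fake_hits = sum(1 for s in fake_frames if s < fake_threshold)
    if fake_frames and fake_hits >= min_confirmations * len(fake_frames):
        return LivenessState.NOTLIVE_CONFIRMED

    # Step 3: neither evaluation was conclusive.
    return LivenessState.LIVENESS_UNKNOWN
```

For example, with four frames per window and Minimum confirmations required = 0.5, two qualifying frames in a window are enough to confirm that window's result. Empty windows are treated as inconclusive rather than as confirmations.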

If Strict Multimodal, Normal Multimodal, or Tolerant Multimodal has been selected as the Detection scheme, the Texture model is used to evaluate liveness first. If the returned liveness value is lower than the Minimum preliminary liveness threshold setting, then no further computations are performed, and the liveness value from the Texture model, capped at (Liveness detection threshold - 0.01), is returned. If the returned liveness value is equal to or greater than the Minimum preliminary liveness threshold setting, then the Context model is also used to evaluate liveness.
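The preliminary Texture check for the three Texture-based multimodal schemes can be sketched in Python as follows. All names are hypothetical, and the Context model is represented by a callable so that it only runs when the Texture score clears the preliminary threshold. The cap is read here as the Liveness detection threshold minus 0.01, an interpretation chosen so that a capped value always falls below the pass threshold. Passing `min`, `average`, or `max` as `combine` corresponds to Strict, Normal, and Tolerant Multimodal respectively.

```python
from typing import Callable


def multimodal_liveness(
    texture_score: float,
    run_context_model: Callable[[], float],     # hypothetical: evaluates the Context model on demand
    combine: Callable[[float, float], float],   # min, average, or max
    liveness_threshold: float,                  # Liveness detection threshold
    min_preliminary_threshold: float,           # Minimum preliminary liveness threshold
) -> float:
    """Texture-first evaluation for Strict, Normal, and Tolerant Multimodal."""
    if texture_score < min_preliminary_threshold:
        # Early exit: the Context model is never run.
        # Assumption: the cap is (Liveness detection threshold - 0.01).
        return min(texture_score, liveness_threshold - 0.01)
    # Texture score is promising, so spend the extra computation on the Context model.
    return combine(texture_score, run_context_model())


def average(a: float, b: float) -> float:
    """Combiner for Normal Multimodal."""
    return (a + b) / 2
```

The design point is cost: an obviously fake face is rejected after one model, and the second model only runs for faces that might pass.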

RGB Liveness Events

When an RGB liveness check completes and returns either LIVENESS_CONFIRMED or NOTLIVE_CONFIRMED, an event is generated with the following attributes:

- "type": "action",

- "actionId": "rgbLiveness",

- "liveness": liveness confidence value, ranging from 0.0 to 1.0.

- "livenessConfirmed": "true" or "false", depending on the result of the RGB liveness check.
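If your application receives these events as JSON objects, a small parser can validate them before acting on the result. This Python sketch assumes each event arrives as a JSON string containing the attributes listed above; the transport and the `parse_rgb_liveness_event` name are hypothetical. It accepts `livenessConfirmed` either as the string "true"/"false" or as a JSON boolean.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class RgbLivenessResult:
    liveness: float   # liveness confidence, 0.0 to 1.0
    confirmed: bool   # parsed from "livenessConfirmed"


def parse_rgb_liveness_event(raw: str) -> Optional[RgbLivenessResult]:
    """Parse one event; return None if it isn't an RGB liveness action event."""
    event = json.loads(raw)
    if event.get("type") != "action" or event.get("actionId") != "rgbLiveness":
        return None
    liveness = float(event["liveness"])
    if not 0.0 <= liveness <= 1.0:
        raise ValueError(f"liveness out of range: {liveness}")
    # Accept both the string "true"/"false" and a JSON boolean.
    confirmed = str(event["livenessConfirmed"]).lower() == "true"
    return RgbLivenessResult(liveness, confirmed)
```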

RGB Liveness Troubleshooting

If you're getting incorrect liveness results, try doing the following:

- Ensure that the subject's face is uniformly lit and isn't washed out.

- Try changing the background to a plain design (i.e. make sure the background doesn't contain any lines).

If you'd like the SAFR team to investigate your issue, please capture video recorded directly from the camera (i.e. not a screen capture) so that the SAFR team can reproduce the issue.

Pose Liveness Detection

Windows, macOS, Linux, and iOS only.

With pose liveness detection, subjects are asked to approach the camera and move their head in some way (e.g. turn it to the left). Pose liveness uses the subject's center pose quality, both before and after the head movement, to determine their liveness.

Pose liveness is almost impossible to fool with photos, but recorded videos can sometimes fool it if the person in the recording moves their head in the way that the pose liveness detector is expecting.

The Secure Access with Profile Pose operator mode has been created to support the use of pose liveness detection.

Pose Liveness Setup

Pose liveness detection works best under the following conditions:

- Cameras need to support at least 30 frames/second.

- The ideal location is well lit with flood lighting. Results will be better if the lighting is consistent around the area where the camera tracks subjects' faces. Both backlighting and dark spots can reduce the effectiveness of the pose liveness detector.

- The camera should be set up close to a door, facing the people who are coming towards the door. It should be placed at an angle of about 35 degrees with respect to the door, and set to a semi-wide angle so that the tracking area is larger.

- The camera should be able to detect and recognize faces about 12-21 feet away. At this initial recognition point, subjects' faces should mostly be looking straight at the camera.

- When subjects are about 3 feet from the camera, their faces should appear closer to a profile image due to the camera angle. This is when the pose liveness detection occurs.

- Pose liveness can operate with various camera resolutions and frame rates. However, the frame rate should be at least 15 fps and the resolution should be at least 1080p. A frame rate of 30 fps with 4K resolution achieves the best results.
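The frame-rate and resolution guidance in the last item can be expressed as a simple classification. In this hypothetical Python helper, resolution is given as the number of vertical lines (1080 for 1080p), and 4K is assumed to mean 2160-line UHD.

```python
def rate_pose_camera(fps: float, vertical_lines: int) -> str:
    """Classify a camera configuration against the pose liveness guidance.

    `vertical_lines` is 1080 for 1080p; 4K is assumed to mean 2160-line UHD.
    """
    if fps < 15 or vertical_lines < 1080:
        return "below minimum"
    if fps >= 30 and vertical_lines >= 2160:
        return "best"
    return "acceptable"
```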

Pose Liveness Events

When a pose liveness check successfully completes, an event is generated with the following attributes:

- "type": "action",

- "actionId": "poseLiveness",

Smile Authorization

Smile authorization uses the smile action to test subjects' liveness. With smile actions, subjects are asked to approach a camera and then smile. This form of liveness detection is very difficult to spoof with a photo, but recorded videos can occasionally fool it.

The Secure Access with Smile operator mode has been created to support the use of smile authorization.

Smile Authorization Events

When a smile action check completes, an event is generated with the following attributes:

- "type": "action",

- "actionId": "smileToActivate",

- "smile": "true" or "false", depending on the result of the smile authorization check.

Smile Authorization with RGB Liveness Detection

One of the configuration options for the smile action on the Recognition Preferences menu is to run the smile action and RGB liveness detection at the same time. The Secure Access with Smile and RGB Liveness operator mode has been created to support this combination of smile authorization and RGB liveness detection.

Smile authorization with RGB liveness detection is only available on the following platforms:

- Windows machines with an NVIDIA GPU

- Linux machines with an NVIDIA GPU

Note that the RGB liveness detector (located on the Detection Preferences menu in Windows) needs to be enabled for RGB liveness detection to work.

Smile Authorization with RGB Liveness Detection Events

When a smile authorization with RGB liveness check completes, an event is generated with the following attributes:

- "type": "action",

- "actionId": "smileToActivate",

- "liveness": liveness confidence value, ranging from 0.0 to 1.0.

- "livenessConfirmed": "true" or "false", depending on the result of the RGB liveness check.

3D Liveness Detection

Windows only.

3D liveness is a special feature of certain Intel RealSense camera models that allows them to distinguish flat images from three-dimensional ones, thus allowing SAFR to tell the difference between a real face and a photo or video. This feature only works with Intel RealSense D415 and D435 cameras.

This form of liveness detection allows you to offload the work of liveness detection to your cameras, thus reducing the workload on the rest of your system.

3D Liveness Events

When a 3D liveness check completes, an event is generated with the following attributes:

- "type": "action",

- "actionId": "3dLiveness",

- "liveness": liveness confidence value, ranging from 0.0 to 1.0.

- "livenessConfirmed": "true" or "false", depending on the result of the 3D liveness check.
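Because each type of liveness detection reports through an action event with a distinct actionId, a single handler can route all of them. This Python sketch assumes events arrive as JSON strings with the attributes listed in this section; `summarize_liveness_event` is a hypothetical name. It tells smile authorization with RGB liveness apart from plain smile authorization by the presence of `livenessConfirmed`, which is an inference from the attribute lists above, not documented SAFR behavior.

```python
import json


def _is_true(value) -> bool:
    """Treat the string "true" (any case) or a JSON boolean true as True."""
    return str(value).lower() == "true"


def summarize_liveness_event(raw: str) -> str:
    """Return a one-line summary of a liveness action event."""
    event = json.loads(raw)
    if event.get("type") != "action":
        return "ignored: not an action event"
    action = event.get("actionId")
    if action == "poseLiveness":
        # Pose liveness events are only generated when the check succeeds.
        return "poseLiveness: passed"
    if action == "smileToActivate" and "livenessConfirmed" in event:
        # Assumption: the smile + RGB liveness variant carries liveness fields.
        live = _is_true(event["livenessConfirmed"])
        return f"smile+rgb: live={live} score={float(event['liveness']):.2f}"
    if action == "smileToActivate":
        return f"smile: {_is_true(event.get('smile'))}"
    if action in ("rgbLiveness", "3dLiveness"):
        live = _is_true(event["livenessConfirmed"])
        return f"{action}: live={live} score={float(event['liveness']):.2f}"
    return f"ignored: unknown actionId {action!r}"
```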